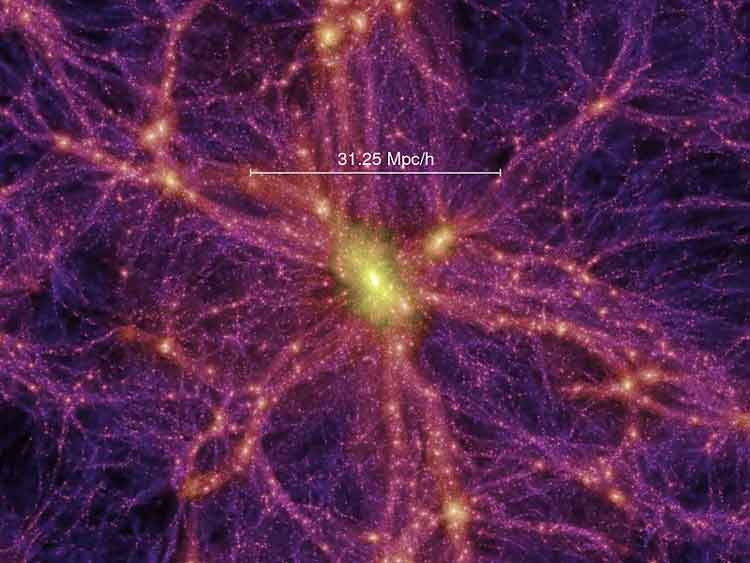

The Millennium Run simulated the universe up to the present epoch, when structures are abundant, manifesting themselves as stars, galaxies and clusters.

An N-body simulation is a simulation of massive particles under the influence of physical forces, usually gravity and sometimes other forces. In physical cosmology, N-body simulations are used to study processes of non-linear structure formation, such as the formation of galaxy filaments and galaxy halos from dark matter. Direct N-body simulations are used to study the dynamical evolution of star clusters.

Direct gravitational N-body simulations

In direct gravitational N-body simulations, the equations of motion of a system of N particles under the influence of their mutual gravitational forces are integrated numerically without any simplifying approximations. The first direct N-body simulations were carried out by Sebastian von Hoerner at the Astronomisches Rechen-Institut in Heidelberg (Germany). Sverre Aarseth at Cambridge University (UK) dedicated his entire scientific career to developing a series of highly efficient N-body codes for astrophysical applications, which use adaptive (hierarchical) time steps, an Ahmad-Cohen neighbour scheme and regularization of close encounters. Regularization is a mathematical trick that removes the singularity in the Newtonian law of gravitation for two particles that approach each other arbitrarily closely. Sverre Aarseth's codes are used to study the dynamics of star clusters, planetary systems and galactic nuclei.
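A minimal sketch of direct summation follows, assuming units with G = 1, a fixed timestep shared by all particles, and no softening or regularization (so particles must never coincide). Aarseth's codes use more elaborate integration schemes with individual timesteps and regularization; this sketch instead swaps in the simple kick-drift-kick leapfrog integrator. The function names `accelerations`, `leapfrog` and `total_energy` are hypothetical.

```python
import math


def accelerations(pos, mass, G=1.0):
    """Direct summation: a_i = sum over j != i of G m_j (r_j - r_i) / |r_j - r_i|^3.

    Each unordered pair is visited once, so one call costs N(N-1)/2 pair forces.
    Assumes no two particles coincide (no softening, no regularization).
    """
    n = len(pos)
    acc = [[0.0, 0.0, 0.0] for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):
            dx = [pos[j][k] - pos[i][k] for k in range(3)]
            r2 = dx[0] ** 2 + dx[1] ** 2 + dx[2] ** 2
            inv_r3 = 1.0 / (r2 * math.sqrt(r2))
            for k in range(3):
                acc[i][k] += G * mass[j] * dx[k] * inv_r3  # i is pulled towards j
                acc[j][k] -= G * mass[i] * dx[k] * inv_r3  # equal and opposite pull on j
    return acc


def leapfrog(pos, vel, mass, dt, steps, G=1.0):
    """Advance positions and velocities in place with kick-drift-kick leapfrog."""
    acc = accelerations(pos, mass, G)
    for _ in range(steps):
        for i in range(len(pos)):
            for k in range(3):
                vel[i][k] += 0.5 * dt * acc[i][k]  # half kick
                pos[i][k] += dt * vel[i][k]        # full drift
        acc = accelerations(pos, mass, G)
        for i in range(len(pos)):
            for k in range(3):
                vel[i][k] += 0.5 * dt * acc[i][k]  # second half kick
    return pos, vel


def total_energy(pos, vel, mass, G=1.0):
    """Kinetic energy plus pairwise gravitational potential energy."""
    kinetic = sum(0.5 * m * sum(v * v for v in vi) for m, vi in zip(mass, vel))
    potential = 0.0
    for i in range(len(pos)):
        for j in range(i + 1, len(pos)):
            potential -= G * mass[i] * mass[j] / math.dist(pos[i], pos[j])
    return kinetic + potential


if __name__ == "__main__":
    # Two equal masses on a circular orbit of separation 1 (G = 1).
    pos = [[0.5, 0.0, 0.0], [-0.5, 0.0, 0.0]]
    vel = [[0.0, 0.5, 0.0], [0.0, -0.5, 0.0]]
    mass = [0.5, 0.5]
    e0 = total_energy(pos, vel, mass)  # -0.125
    leapfrog(pos, vel, mass, dt=0.01, steps=1000)
    print("relative energy error:", abs(total_energy(pos, vel, mass) - e0) / abs(e0))
```

Leapfrog is chosen here because it is time-reversible and keeps the energy error bounded over long runs, which makes it a reasonable default for a short illustration.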

General relativity simulations

Many simulations are large enough that the effects of general relativity in establishing a Friedmann-Robertson-Walker cosmology are significant. This is incorporated in the simulation as an evolving measure of distance (the scale factor) in a comoving coordinate system, which causes the particles to slow down in comoving coordinates (in addition to the redshifting of their physical energy). However, the contributions of general relativity and the finite speed of gravity can otherwise be ignored, as typical dynamical timescales are long compared to the light crossing time for the simulation, and the space-time curvature induced by the particles and the particle velocities are small. The boundary conditions of these cosmological simulations are usually periodic (or toroidal), so that one edge of the simulation volume matches up with the opposite edge.
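With periodic boundaries, pairwise separations are commonly measured with the minimum-image convention: each component is wrapped so that the nearest periodic copy of the other particle is used. The sketch below assumes a cube of side `box` and uses hypothetical names `minimum_image` and `wrap`. Because gravity is long-range, the nearest image alone is only an approximation for the force; periodic codes typically add an Ewald correction or compute the long-range part on a Fourier mesh.

```python
def minimum_image(xi, xj, box):
    """Separation vector from particle i to the nearest periodic image of particle j
    in a cube of side `box`; each component ends up in [-box/2, box/2]."""
    return [(b - a) - box * round((b - a) / box) for a, b in zip(xi, xj)]


def wrap(x, box):
    """Map a position back into the primary cell [0, box) after a drift step,
    so a particle leaving through one face re-enters through the opposite face."""
    return [c % box for c in x]
```

For example, in a unit box two particles at x = 0.1 and x = 0.9 are 0.2 apart through the boundary, not 0.8 apart through the interior.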

Calculation optimizations

N-body simulations are simple in principle, because they merely involve integrating the 6N ordinary differential equations defining the particle motions in Newtonian gravity. In practice, the number N of particles involved is usually very large (typical simulations include many millions, and the Millennium simulation included ten billion), and the number of particle-particle interactions that must be computed grows as N², so ordinary methods of numerically integrating differential equations, such as the Runge-Kutta method, are inadequate. Therefore, a number of refinements are commonly used.
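Written out, the 6N first-order equations (three position and three velocity components for each particle i of mass m_i) are

$$\frac{d\mathbf{r}_i}{dt} = \mathbf{v}_i, \qquad \frac{d\mathbf{v}_i}{dt} = -G \sum_{j \ne i} m_j \, \frac{\mathbf{r}_i - \mathbf{r}_j}{|\mathbf{r}_i - \mathbf{r}_j|^3}, \qquad i = 1, \dots, N.$$

Evaluating every right-hand side once requires one force per unordered pair of particles,

$$N_{\text{pairs}} = \frac{N(N-1)}{2} \approx \frac{N^2}{2},$$

so for N ≈ 10^{10}, as in the Millennium simulation, a single direct force evaluation would involve about 5 × 10^{19} pair interactions.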

One of the simplest refinements is that each particle carries with it its own timestep variable, so that particles with widely different dynamical times don't all have to be evolved forward at the rate of that with the shortest time.
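One common way to organise individual timesteps is a hierarchy of "block" timesteps, each a power-of-two fraction of a maximum step, so that particles on different levels stay synchronised. The sketch below assumes the criterion Δt_i = sqrt(2 η ε / |a_i|), a form used for example in GADGET-2, where the accuracy parameter η (`eta`) and softening length ε (`eps`) take illustrative values; the function names `timestep_levels` and `active_particles` are hypothetical.

```python
import math


def timestep_levels(acc_mags, dt_max, eta=0.025, eps=0.01, max_level=20):
    """Assign each particle a level L so that its step dt_max / 2**L does not exceed
    the assumed criterion sqrt(2 * eta * eps / |a|) (capped at dt_max)."""
    levels = []
    for a in acc_mags:
        dt_wanted = dt_max if a == 0 else min(dt_max, math.sqrt(2 * eta * eps / a))
        level = 0
        while dt_max / 2 ** level > dt_wanted and level < max_level:
            level += 1  # halve the step until it satisfies the criterion
        levels.append(level)
    return levels


def active_particles(levels, step, max_level):
    """Indices of particles due for an update at fine step `step`,
    where one fine step is dt_max / 2**max_level."""
    return [i for i, lev in enumerate(levels) if step % 2 ** (max_level - lev) == 0]
```

With `timestep_levels([0.0, 1e-6, 1.0], dt_max=1.0)` the two weakly accelerated particles stay on the largest step (level 0) while the strongly accelerated one is placed on level 6; between full steps only that particle is active, so the slow particles are not advanced at the rate of the fastest one.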

There are two basic algorithms by which the simulation may be optimised.

Tree method

In a tree method such as a Barnes-Hut simulation, the volume is usually divided into cubic cells in an octree, so that only particles from nearby cells need to be treated individually, while the particles in a distant cell can be treated together as a single large particle located at the cell's center of mass (or as a low-order multipole expansion). This can dramatically reduce the number of particle pair interactions that must be computed. To prevent the simulation from becoming swamped by computing particle-particle interactions, the cells must be refined into smaller cells in denser parts of the simulation, which contain many particles per cell.
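A compact Barnes-Hut sketch follows. It assumes G = 1, positive masses, distinct particle positions, monopole (center-of-mass) cells only, Plummer-softened forces with an illustrative softening `eps`, and the common opening rule that a cell of width s at distance d is used as a single particle when s < θ d, with a hypothetical default θ = 0.5. The names `Cell` and `build_tree` are our own.

```python
class Cell:
    """A cubic octree cell: geometric centre, half-width, total mass and centre of mass."""

    def __init__(self, center, half):
        self.center, self.half = center, half
        self.mass, self.com = 0.0, [0.0, 0.0, 0.0]
        self.children = None  # eight sub-cells once this cell has been refined
        self.body = None      # (position, mass) while this is a leaf holding one particle

    def insert(self, pos, m):
        if self.children is None and self.body is None:  # empty leaf: store the particle
            self.body, self.mass, self.com = (pos, m), m, list(pos)
            return
        if self.children is None:  # occupied leaf: refine into eight octants
            h = self.half / 2
            self.children = [Cell([self.center[k] + (h if (i >> k) & 1 else -h) for k in range(3)], h)
                             for i in range(8)]
            old_pos, old_m = self.body
            self.body = None
            self._child(old_pos).insert(old_pos, old_m)
        self._child(pos).insert(pos, m)
        total = self.mass + m  # update this cell's monopole with the new particle
        self.com = [(self.com[k] * self.mass + pos[k] * m) / total for k in range(3)]
        self.mass = total

    def _child(self, pos):
        # bit k of the octant index is set when the position lies above the centre along axis k
        return self.children[sum(1 << k for k in range(3) if pos[k] > self.center[k])]

    def accel(self, pos, theta=0.5, eps=1e-3, G=1.0):
        """Softened acceleration at `pos`, opening cells that are too close to approximate."""
        if self.mass == 0.0:
            return [0.0, 0.0, 0.0]
        dx = [self.com[k] - pos[k] for k in range(3)]
        d2 = sum(c * c for c in dx)
        if self.children is None or (2 * self.half) ** 2 < theta ** 2 * d2:
            inv_r3 = (d2 + eps * eps) ** -1.5
            return [G * self.mass * c * inv_r3 for c in dx]  # exactly zero for the particle itself
        acc = [0.0, 0.0, 0.0]
        for child in self.children:
            for k, a in enumerate(child.accel(pos, theta, eps, G)):
                acc[k] += a
        return acc


def build_tree(positions, masses):
    """Root cell: a cube enclosing all particles, then insert them one by one."""
    lo = min(min(p) for p in positions)
    hi = max(max(p) for p in positions)
    root = Cell([(lo + hi) / 2] * 3, (hi - lo) / 2 + 1e-12)
    for p, m in zip(positions, masses):
        root.insert(p, m)
    return root
```

Setting θ = 0 opens every cell and reproduces softened direct summation; larger θ trades accuracy for fewer interactions. Refinement continues until each leaf holds one particle, which is the refinement in dense regions described above.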

Particle mesh method

Another possibility is the particle mesh method, in which space is discretised on a mesh and, for the purposes of computing the gravitational potential, particles are assumed to be divided between the nearby vertices of the mesh. Finding the gravitational potential Φ is then easy, because the Poisson equation

$$\nabla^2 \Phi = 4\pi G \rho,$$

where G is Newton's constant and ρ is the density (proportional to the number of particles at the mesh points), is trivial to solve by using the fast Fourier transform to go to the frequency domain, where the Poisson equation has the simple form

$$\hat{\Phi} = -4\pi G \frac{\hat{\rho}}{k^2},$$

where $\vec{k}$ is the comoving wavenumber and the hats denote Fourier transforms. Since $\vec{g} = -\nabla \Phi$, the gravitational field can now be found by multiplying $\hat{\Phi}$ by $-i\vec{k}$ and computing the inverse Fourier transform (or computing the inverse transform and then using some other method). Since this method is limited by the mesh size, in practice a finer mesh or some other technique (such as combining with a tree or simple particle-particle algorithm) is used to compute the small-scale forces. Sometimes an adaptive mesh is used, in which the mesh cells are much smaller in the denser regions of the simulation.
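The particle mesh steps can be sketched in one dimension. This toy version assumes a periodic box, equal-mass particles, cloud-in-cell (CIC) mass assignment and interpolation (one common choice), a mesh size that is a power of two, a hand-written radix-2 FFT so that no third-party library is needed, and the mean density (the k = 0 mode) removed, as is usual in periodic cosmological boxes. Real codes work in three dimensions; the names `fft`, `cic` and `pm_accelerations` are hypothetical.

```python
import cmath
import math


def fft(a, inverse=False):
    """Recursive radix-2 Cooley-Tukey FFT; len(a) must be a power of two.
    The forward transform uses exp(-2*pi*i*j*n/N); the inverse omits the 1/N factor."""
    n = len(a)
    if n == 1:
        return [complex(a[0])]
    even, odd = fft(a[0::2], inverse), fft(a[1::2], inverse)
    sign = 1 if inverse else -1
    out = [0j] * n
    for k in range(n // 2):
        t = cmath.exp(sign * 2j * math.pi * k / n) * odd[k]
        out[k], out[k + n // 2] = even[k] + t, even[k] - t
    return out


def cic(x, box, n_mesh):
    """Cloud-in-cell: index of the mesh point to the left of x and fractional offset from it."""
    s = (x % box) / (box / n_mesh)
    i = int(s)
    return i % n_mesh, s - i


def pm_accelerations(xs, m, box, n_mesh, G=1.0):
    """1D periodic particle-mesh accelerations for particles of equal mass m."""
    h = box / n_mesh
    rho = [0.0] * n_mesh
    for x in xs:  # share each particle's mass between its two nearest mesh points
        i, f = cic(x, box, n_mesh)
        rho[i] += m * (1 - f) / h
        rho[(i + 1) % n_mesh] += m * f / h
    rho_k = fft(rho)
    g_k = [0j] * n_mesh
    for j in range(1, n_mesh):  # j = 0 (mean density) stays zero
        if 2 * j == n_mesh:
            continue  # Nyquist mode: the derivative is ill-defined there, so drop it
        k = 2 * math.pi * (j if 2 * j < n_mesh else j - n_mesh) / box
        phi_k = -4 * math.pi * G * rho_k[j] / k ** 2  # Poisson equation in Fourier space
        g_k[j] = -1j * k * phi_k                      # g = -dPhi/dx
    g = [v.real / n_mesh for v in fft(g_k, inverse=True)]
    acc = []
    for x in xs:  # interpolate back to the particles with the same CIC weights
        i, f = cic(x, box, n_mesh)
        acc.append((1 - f) * g[i] + f * g[(i + 1) % n_mesh])
    return acc
```

Because the same CIC weights are used for assignment and interpolation and the Fourier-space gradient kernel is odd in k, a lone particle feels no force from its own mass in this sketch, and the forces on a pair of particles are equal and opposite.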

Two-particle systems

Although there are millions or billions of particles in typical simulations, each simulation particle typically represents a very large mass, typically 10⁹ solar masses. This can introduce problems with short-range interactions between the particles, such as the formation of two-particle binary systems. As the particles are meant to represent large numbers of dark matter particles or groups of stars, these binaries are unphysical. To prevent this, a softened Newtonian force law is used, which does not diverge as the inverse-square radius at short distances. Most simulations implement this quite naturally by computing forces on cells of finite size. It is important to implement the discretization procedure in such a way that particles always exert a vanishing force on themselves.
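One common softened force law is Plummer softening, which replaces the Newtonian 1/r² with r/(r² + ε²)^{3/2} for a softening length ε; other codes, such as GADGET, use spline kernels instead. The sketch below assumes Plummer softening, and the name `plummer_accel` is hypothetical.

```python
def plummer_accel(xi, xj, mj, eps, G=1.0):
    """Acceleration of particle i due to particle j with Plummer softening length eps:
    a = G m_j (r_j - r_i) / (|r_j - r_i|^2 + eps^2)^(3/2)."""
    dx = [b - a for a, b in zip(xi, xj)]
    r2 = sum(d * d for d in dx)
    inv = (r2 + eps * eps) ** -1.5  # finite even when r2 == 0
    return [G * mj * d * inv for d in dx]
```

Far outside ε the result matches Newtonian gravity, while the force magnitude peaks at r = ε/√2 at the finite value 2Gm/(3√3 ε²) and falls back to zero at r = 0. A particle paired with itself therefore contributes exactly zero, and close pairs are much less prone to forming tight unphysical binaries.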

Incorporating baryons, leptons and photons into simulations

Many simulations model only cold dark matter and thus include only the gravitational force. Incorporating baryons, leptons and photons into the simulations dramatically increases their complexity, and radical simplifications of the underlying physics must often be made. However, this is an extremely important area, and many modern simulations now try to understand processes that occur during galaxy formation which could account for galaxy bias.

See also

* Millennium Run

* Structure formation

* Large-scale structure of the cosmos

* GADGET

* Galaxy formation and evolution

* N-body problem

* Natural units

* Virgo Consortium

N-body problems and models, Donald Greenspan

External links

* N-body Simulations on Scholarpedia

References

- Sebastian von Hoerner (1960). "Die numerische Integration des n-Körper-Problemes für Sternhaufen. I". Zeitschrift für Astrophysik 50: 184, http://adsabs.harvard.edu/abs/1960ZA.....50..184V.

- Sebastian von Hoerner (1963). "Die numerische Integration des n-Körper-Problemes für Sternhaufen. II". Zeitschrift für Astrophysik 57: 47, http://adsabs.harvard.edu/abs/1963ZA.....57...47V.

- Sverre J. Aarseth (2003). Gravitational N-body Simulations: Tools and Algorithms. Cambridge University Press.

- Edmund Bertschinger (1998). "Simulations of structure formation in the universe". Annual Review of Astronomy and Astrophysics 36: 599–654. doi:10.1146/annurev.astro.36.1.599, http://arjournals.annualreviews.org/doi/abs/10.1146%2Fannurev.astro.36.1.599.

- James Binney and Scott Tremaine (1988). Galactic Dynamics. Princeton University Press. ISBN 0-691-08445-9.

- A survey of all known N-body simulation methods

Retrieved from "http://en.wikipedia.org/"

All text is available under the terms of the GNU Free Documentation License